Can you trust LLM providers for enterprise personalization?

Why data sovereignty is critical for enterprise personalization

At a recent conference, my team gave a demo that compared Claude Sonnet’s built-in features against the capabilities of Intelligent Memory, our enterprise personalization product.

We asked Claude to play the role of two financial analyst personas (Ravi and Sophie) and analyze the same company report.

With Intelligent Memory enabled, when each persona asked whether to buy the stock:

Ravi, who focuses on quantitative financial modeling and technical analysis, received a "neutral" recommendation. The information was presented to him in tables with fundamental financial metrics.

Sophie, who prefers qualitative insights and concise summaries, received a "moderate buy" recommendation. The system highlighted aspects of the report that suggested growth potential beyond what the strict financial metrics indicated.

When asked the same set of questions, base Claude delivered generic responses that weren’t optimized for either audience.

Ultimately, Intelligent Memory allows Ravi and Sophie to receive different investment recommendations based on their individual profiles and risk appetite.

What most vendors call personalization is actually just lumping users into predefined categories such as job titles or departments (“roles”). But this essentially assumes that all users in a category have identical needs, which leads to generic responses that aren’t truly personalized.

LLMs effectively “stereotype” people into generalizations, missing the nuances that distinguish one person from another, even within the same role.

A company might have 1,000 sales reps, but they're not clones: they have different educational backgrounds, communication preferences, and skill levels. Some want detailed technical explanations; others prefer executive summaries. Some write formally, others casually.

Real personalization means understanding each individual's unique characteristics and adapting accordingly, not just applying a one-size-fits-all "sales rep" template to everyone with that title.
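To make the difference concrete, here is a minimal Python sketch contrasting a role template with per-user profiles. The `UserProfile` fields, the template text, and the helper functions are hypothetical illustrations, not part of any MemVerge API.

```python
from dataclasses import dataclass

# Role-based "personalization": everyone with the same title gets the same template.
ROLE_TEMPLATES = {
    "sales rep": "Answer as if speaking to a sales rep.",
}


@dataclass
class UserProfile:
    """Hypothetical per-user profile; the fields are illustrative assumptions."""
    name: str
    role: str
    detail_level: str  # e.g. "technical" or "summary"
    tone: str          # e.g. "formal" or "casual"


def role_instructions(user: UserProfile) -> str:
    # Ignores everything about the user except the job title.
    return ROLE_TEMPLATES[user.role]


def individual_instructions(user: UserProfile) -> str:
    # Starts from the role, then adapts to this specific person.
    detail = (
        "Give detailed technical explanations."
        if user.detail_level == "technical"
        else "Lead with a short executive summary."
    )
    return f"{ROLE_TEMPLATES[user.role]} {detail} Use a {user.tone} tone."


alice = UserProfile("Alice", "sales rep", "technical", "formal")
bob = UserProfile("Bob", "sales rep", "summary", "casual")

# Same role, so the role template cannot tell them apart...
assert role_instructions(alice) == role_instructions(bob)
# ...while the per-user instructions differ.
print(individual_instructions(alice))
print(individual_instructions(bob))
```

The role lookup is still there; the profile simply layers individual preferences on top of it.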

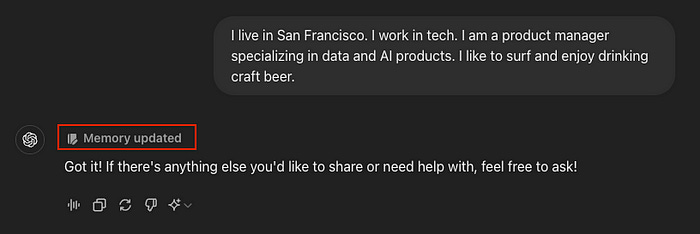

OpenAI recognized this early on, which is why ChatGPT users may notice the occasional “Memory Updated” notification.

ChatGPT saves relevant information (like names, preferences, ongoing tasks) into a memory system, likely a combination of RAG and vector/traditional search.

Other LLMs, including Google Gemini, are likely doing something similar, except that they draw on other data sources they have access to, such as your search history.

Personalization is fundamental to useful AI, but there’s a catch.

OpenAI says memory content is used to improve the user experience and not to train base models (unless you've opted in). But actions speak louder than words, and most enterprises won’t want to expose proprietary data to a walled garden.

Global financial services firms in particular handle highly sensitive data:

client information

proprietary trading algorithms

internal strategic memos and much more

While ChatGPT Enterprise promises not to use customer data for model training, these contractual assurances don't address the fundamental issue: organizational data would still physically reside on servers outside the company's direct control.

This creates material compliance risks, particularly in regions with stringent data localization requirements, which is why vendor-hosted personalization features are restricted in many European countries today.

Without RAG or access to proprietary databases, however, LLMs have an incomplete view of the user’s preferences.

So leaders are forced to choose between limiting their teams (users have to re-establish context and preferences with each new application) and relinquishing control over proprietary enterprise data to a single vendor.


Many pharma and financial services (FSI) customers I speak with are exploring on-prem AI solutions that offer similar capabilities while maintaining complete data sovereignty.

Platform leaders want personalization, but they want it within existing infrastructure, where the firm maintains full control over data access, retention, and usage.

This approach allows users to leverage AI capabilities while ensuring sensitive information never leaves the firm’s security perimeter.

Based on discovery calls with these customers, MemVerge has built a gateway that sits between a chat frontend (like Open WebUI) and a local enterprise LLM (Llama, DeepSeek, or any other base model).

It runs in any VPC (virtual private cloud) and can be deployed in a customer's own secure cloud environment or in a customer's on-prem datacenter.

The gateway acts as a router to backend data sources and injects context and personalization into each request, all within your private network and under your data sovereignty controls.
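A minimal sketch of the routing idea, assuming a simple keyword-based router and two in-memory stand-ins for backend data sources. `search_crm`, `search_research`, `DATA_SOURCES`, `route`, and `build_prompt` are hypothetical names; a production gateway would use real connectors and likely a smarter retrieval step.

```python
from typing import Callable


# Hypothetical stand-ins for backend data sources inside the private network.
def search_crm(query: str) -> str:
    return "CRM: Acme Corp renewal due in Q3."


def search_research(query: str) -> str:
    return "Research: analyst note on Acme Corp margins."


# Keyword-based routing table (an assumption for illustration only).
DATA_SOURCES: dict[str, Callable[[str], str]] = {
    "renewal": search_crm,
    "customer": search_crm,
    "margin": search_research,
    "report": search_research,
}


def route(query: str) -> list[Callable[[str], str]]:
    """Pick every data source whose keyword appears in the query, without duplicates."""
    selected: list[Callable[[str], str]] = []
    for keyword, source in DATA_SOURCES.items():
        if keyword in query.lower() and source not in selected:
            selected.append(source)
    return selected


def build_prompt(query: str, user_memory: list[str]) -> str:
    """Inject retrieved context and the user's stored preferences ahead of the question."""
    context = [source(query) for source in route(query)]
    lines = ["Context:", *context, "User preferences:", *user_memory, "Question:", query]
    return "\n".join(lines)


print(build_prompt("What do the margins in the report suggest?",
                   ["Prefers tables of financial metrics."]))
```

Nothing in this sketch leaves the process; in the architecture described above, the gateway and its data sources would all sit inside the firm's VPC or datacenter.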

We call it Intelligent Memory.

Intelligent Memory extracts useful features from user session logs, stores them in a database, and injects them into the prompt that is passed to the LLM. This temporal approach allows enterprise AI models to “remember” important facts from previous messages and recall user preferences or project contexts later.
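The extract, store, and inject loop can be sketched in a few lines. This assumes a deliberately naive rule-based extractor that looks for phrases like "I prefer"; the regex, the `MemoryStore` class, and `inject_memory` are hypothetical, and a real system would more likely use a model to extract features and a database rather than a dict.

```python
import re
from collections import defaultdict

# Naive extraction rule (assumption): capture "I prefer ..." and "I'm working on ..." phrases.
FEATURE_PATTERN = re.compile(r"\b(I prefer|I'm working on) ([^.]+)", re.IGNORECASE)


class MemoryStore:
    """Hypothetical per-user memory; an in-memory dict stands in for a database."""

    def __init__(self) -> None:
        self._facts: dict[str, list[str]] = defaultdict(list)

    def extract_and_store(self, user_id: str, session_log: list[str]) -> None:
        for message in session_log:
            for verb, obj in FEATURE_PATTERN.findall(message):
                fact = f"{verb} {obj.strip()}"
                if fact not in self._facts[user_id]:
                    self._facts[user_id].append(fact)

    def recall(self, user_id: str) -> list[str]:
        return list(self._facts[user_id])


def inject_memory(store: MemoryStore, user_id: str, question: str) -> str:
    """Prepend remembered facts so the LLM sees them alongside the new question."""
    facts = store.recall(user_id)
    if not facts:
        return question
    memory = "\n".join(f"- {fact}" for fact in facts)
    return f"Known about this user:\n{memory}\n\n{question}"


store = MemoryStore()
store.extract_and_store("ravi", [
    "I prefer tables with fundamental financial metrics.",
    "I'm working on a DCF model for the Q3 report.",
])
# A later session: the new question arrives with the remembered context attached.
print(inject_memory(store, "ravi", "Should I buy the stock?"))
```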

“Memory” can be stored in various formats (relational database, graph database, etc.) depending on the relationships between data points, but the key is maintaining personalized information unique to each user that evolves over time.
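As one possible relational layout (an assumption, not a description of Intelligent Memory's actual schema), each memory can be a timestamped row, so newer observations supersede older ones while the history is preserved. The sketch uses Python's built-in `sqlite3` with an in-memory database; the `memories` table and the helper names are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE memories (
        user_id     TEXT NOT NULL,
        attribute   TEXT NOT NULL,    -- e.g. 'summary_style'
        value       TEXT NOT NULL,
        observed_at INTEGER NOT NULL  -- logical timestamp or epoch seconds
    )
    """
)


def remember(user_id: str, attribute: str, value: str, observed_at: int) -> None:
    # Append-only: older rows are kept as history; the newest row wins on read.
    conn.execute(
        "INSERT INTO memories (user_id, attribute, value, observed_at) VALUES (?, ?, ?, ?)",
        (user_id, attribute, value, observed_at),
    )


def current_profile(user_id: str) -> dict[str, str]:
    """Return the most recent value of each attribute for this user."""
    rows = conn.execute(
        """
        SELECT m.attribute, m.value
        FROM memories AS m
        WHERE m.user_id = ?
          AND m.observed_at = (
              SELECT MAX(observed_at) FROM memories
              WHERE user_id = m.user_id AND attribute = m.attribute
          )
        ORDER BY m.attribute
        """,
        (user_id,),
    ).fetchall()
    return dict(rows)


remember("sophie", "summary_style", "bullet points", observed_at=1)
remember("sophie", "risk_appetite", "moderate", observed_at=1)
remember("sophie", "summary_style", "one-paragraph summary", observed_at=2)
print(current_profile("sophie"))
```

A graph database would suit the same idea when the relationships between facts (people, projects, documents) matter more than the latest value of each attribute.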

Moreover, the gateway architecture makes memory work across different LLMs and sessions: whichever model you swap in gets the same access to data sources, while everything stays entirely within your own infrastructure for enterprise security.
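Because personalization lives in the gateway rather than inside any one model, the same memory can be handed to whichever backend serves a request. A minimal sketch, assuming a hypothetical `ChatBackend` protocol and stub backends standing in for locally hosted models such as Llama or DeepSeek (no real model is called):

```python
from typing import Protocol


class ChatBackend(Protocol):
    """Hypothetical interface any local LLM backend would implement."""
    name: str

    def complete(self, prompt: str) -> str: ...


class StubBackend:
    """Stand-in for a local model server; it only reports what it received."""

    def __init__(self, name: str) -> None:
        self.name = name

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] received {len(prompt.splitlines())} prompt lines"


# One shared memory, held by the gateway rather than by any model vendor.
SHARED_MEMORY = {
    "ravi": ["Prefers tables of fundamental financial metrics."],
}


def ask(backend: ChatBackend, user_id: str, question: str) -> tuple[str, str]:
    """Build the prompt from gateway-held memory, then send it to any backend."""
    prompt = "\n".join([*SHARED_MEMORY.get(user_id, []), question])
    return prompt, backend.complete(prompt)


llama = StubBackend("llama")
deepseek = StubBackend("deepseek")
prompt_a, reply_a = ask(llama, "ravi", "Should I buy the stock?")
prompt_b, reply_b = ask(deepseek, "ravi", "Should I buy the stock?")
assert prompt_a == prompt_b  # swapping models changes the backend, not the remembered context
print(reply_a)
print(reply_b)
```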

Intelligent Memory does not replace RAG; it enhances how information is personalized, with zero development work for end users. Enterprises simply install the gateway between their UI and LLMs, and personalization begins automatically without requiring SDK integration or code changes.
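One common way for a gateway to require no code changes is to accept and forward the same request format the frontend already sends. The sketch below assumes an OpenAI-style chat completions body (a `model` field plus a `messages` list of role/content dicts); whether Intelligent Memory works exactly this way is an assumption, and `personalize_request` is a hypothetical name.

```python
import copy
from typing import Any


def personalize_request(body: dict[str, Any], memories: list[str]) -> dict[str, Any]:
    """Return a copy of an OpenAI-style chat request with a memory system message added.

    The request schema is unchanged, so neither the chat frontend nor the model
    server has to be modified; only the message list gains one entry.
    """
    augmented = copy.deepcopy(body)  # never mutate the caller's request
    if memories:
        memory_msg = {
            "role": "system",
            "content": "User context:\n" + "\n".join(f"- {m}" for m in memories),
        }
        augmented["messages"] = [memory_msg, *augmented.get("messages", [])]
    return augmented


incoming = {
    "model": "llama-3",  # placeholder model name
    "messages": [{"role": "user", "content": "Summarize the report."}],
}
outgoing = personalize_request(incoming, ["Prefers concise qualitative summaries."])
print(outgoing["messages"][0]["content"])
```

Since only the message list changes, the frontend and model server see the same request shape they already handle.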

If you’d like a demo of Intelligent Memory, please email me — jing.xie@memverge.com.

The choice facing enterprise leaders today isn't just about AI capabilities – it's about who controls your organization's collective intelligence.

True enterprise personalization requires more than just categorizing users by role or department. It demands understanding each individual's unique communication style and decision-making approach.

On-prem deployment does carry an incremental cost, but I believe it pales in comparison to the risks of vendor lock-in, competitive exposure, and the reputational damage a data privacy incident could cause.

As AI becomes increasingly central to enterprise operations, the firms that maintain sovereignty over their personalization layer will be the ones that can truly leverage AI as a competitive differentiator.

The question isn't whether to personalize your enterprise AI – it's whether you'll own that personalization or rent it from someone else.