How can AMD close the gap with Nvidia?

What AMD can do to accelerate developer trust and build a community

Whenever I speak with platform engineering leaders, they’re pleasantly surprised when I tell them MemVerge works with the long tail of accelerators beyond Nvidia: Trainium, Inferentia, TPUs, and, of course, AMD.

The reality is that Nvidia has an overwhelmingly dominant position in the market. But at the enterprise level, customers want to use other chips, especially AMD’s, because:

Demand for GPUs still exceeds supply

Platform teams don’t want to be locked in to a single vendor

Compute is compute; enterprises want the most GPUs at the lowest possible cost

Most of the large financial services institutions (FSIs) and pharmaceutical companies I speak with already have a dedicated MI300 cluster or are planning to build one. But there are legitimate bottlenecks, on both the business and the technical side, that keep workloads from running on AMD at scale.

If AMD can solve these bottlenecks, there is a latent audience of platform engineers who would readily embrace Instinct MI-series clusters and even make the business case for investing further in the AMD ecosystem.

AMD can kickstart a developer community by making supply more accessible

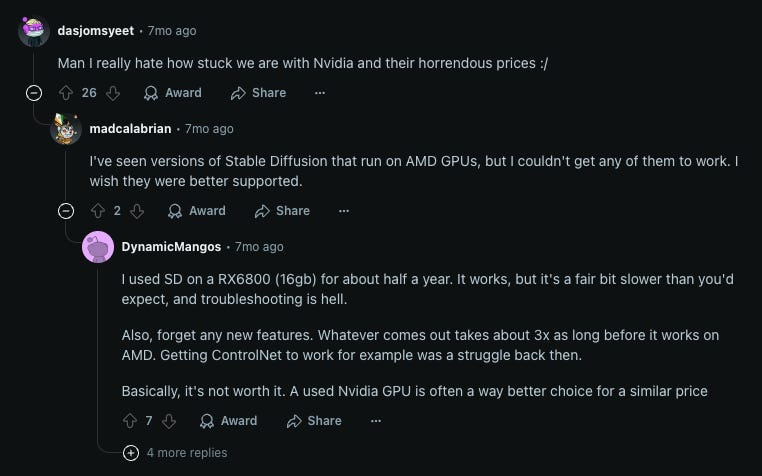

Developers are tinkerers, and if AMD wants to build a community around the Instinct MI line, it needs to get its products into developers’ hands.

In one researcher’s words: “Today, you can’t buy MI anythings off the shelf. Azure is basically the only large hyperscaler offering Instinct GPUs and even then, you can’t just put in your credit card and get yourself an MI250X.”

“Maybe Oracle cloud has some, but even if you went as far as contacting sales, they’re often sold in packs of eight — far more than what an average developer might need for simpler workloads.”

Today, of the top three hyperscalers, only Azure offers MI300s. AWS and Google Cloud don’t, which means a large share of the developer install base (everyone building primarily on those two clouds) can’t even test MI300s for their workloads. That’s a massive TAM waiting to be unlocked.

AMD has an opportunity to solve virtualization at the software level

Resourceful platform engineering teams have tried to work around these supply-side challenges with virtualization and resource management software. If we can’t acquire more chips, the thinking goes, let’s distribute our workloads more efficiently instead.

But existing solutions, for both Nvidia and AMD, are still not fully featured:

GPU Virtualization

While most ML/AI PhDs are familiar with SLURM (the scheduler of choice at most academic research organizations), SLURM lacks native GPU virtualization, which makes it very difficult for F500 platform teams to share GPUs efficiently across workloads.

Organizations using SLURM must allocate whole physical GPUs per task. This makes it difficult for enterprise platform teams to schedule urgent, short wall-time jobs without sacrificing long-running jobs.

In the mildest case, researchers sit idle, waiting for compute to start their jobs. At the other extreme, platform teams have to accept wasted compute whenever a long-running job fails or needs to be deprioritized.

One AI researcher screen-shared their SLURM cluster with me, and I saw wall times ranging from 4 hours to multiple days. Despite the cluster having more than 10 nodes with 8 GPUs each, the researcher still couldn’t get anything to run.
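To make the cost of whole-GPU allocation concrete, here is a toy Python sketch (not SLURM’s actual scheduler) that places jobs on one 8-GPU node two ways: one full GPU per job, or fractional packing by memory using first-fit decreasing bin packing. The 128 GB per-GPU capacity, the job sizes, and all names are hypothetical illustration values, and the sketch models memory only, not compute contention or isolation between jobs sharing a GPU.

```python
from dataclasses import dataclass

GPUS_PER_NODE = 8    # matches the 8-GPU nodes described above
GPU_MEMORY_GB = 128  # assumed per-GPU memory, for illustration only


@dataclass
class GpuJob:
    name: str
    memory_gb: int  # peak GPU memory the job actually uses (hypothetical)


def whole_gpu_allocation(jobs, gpus=GPUS_PER_NODE):
    """Each job claims one full physical GPU, however little it uses.

    Returns (placed, waiting) where placed holds (job name, GPU index).
    """
    placed = [(job.name, gpu) for gpu, job in enumerate(jobs[:gpus])]
    return placed, jobs[gpus:]


def fractional_allocation(jobs, gpus=GPUS_PER_NODE, capacity=GPU_MEMORY_GB):
    """First-fit decreasing bin packing of jobs onto GPUs by memory.

    Returns (placed, waiting) where placed holds (job name, GPU index).
    Only memory is modeled; compute contention and isolation are ignored.
    """
    free = [capacity] * gpus
    placed, waiting = [], []
    for job in sorted(jobs, key=lambda j: j.memory_gb, reverse=True):
        for gpu, remaining in enumerate(free):
            if job.memory_gb <= remaining:
                free[gpu] -= job.memory_gb
                placed.append((job.name, gpu))
                break
        else:  # no GPU had room, so the job queues
            waiting.append(job)
    return placed, waiting


if __name__ == "__main__":
    # Sixteen hypothetical jobs that each need a quarter of one GPU's memory.
    jobs = [GpuJob(f"job-{i}", 32) for i in range(16)]

    placed, waiting = whole_gpu_allocation(jobs)
    print("whole GPUs:", len(placed), "running,", len(waiting), "waiting")  # 8 running, 8 waiting

    placed, waiting = fractional_allocation(jobs)
    print("fractional:", len(placed), "running,", len(waiting), "waiting")  # 16 running, 0 waiting
```

Under whole-GPU allocation, half the jobs queue even though the node has plenty of unused memory; fractional packing fits all sixteen on four GPUs and leaves four GPUs free.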

Workload Preemption

Researchers frequently request more resources than necessary because they don’t know when their next job will get scheduled.

A user told me, “We don't realistically need a box with eight GPUs in it – but we could use a box with one. These Instincts have 128GB these days, that’s way more memory than what an average developer might need for a simple inference solution.”
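A back-of-envelope calculation shows why one 128 GB GPU often covers an inference workload: model weights take roughly the parameter count times the bytes per parameter. The sketch below is an illustrative estimate, not a sizing tool. The 20% `overhead` allowance for activations, KV cache, and runtime buffers is an assumption (real overhead depends heavily on batch size and context length), the 128 GB figure comes from the quote above, and 1 GB is taken as 10^9 bytes.

```python
import math

# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}


def inference_memory_gb(params_billions, dtype="fp16", overhead=0.2):
    """Rough GPU memory for serving a model, in GB (1 GB = 1e9 bytes).

    Weights take params * bytes-per-param; `overhead` is an assumed
    fractional allowance for activations, KV cache, and runtime buffers.
    """
    weights_gb = params_billions * BYTES_PER_PARAM[dtype]
    return weights_gb * (1 + overhead)


def gpus_needed(params_billions, gpu_memory_gb=128, dtype="fp16", overhead=0.2):
    """Minimum GPU count whose combined memory covers the estimate."""
    return math.ceil(inference_memory_gb(params_billions, dtype, overhead) / gpu_memory_gb)


if __name__ == "__main__":
    # Expected GPU counts: 1, 2, and 1 respectively.
    for params, dtype in [(7, "fp16"), (70, "fp16"), (70, "int8")]:
        need = inference_memory_gb(params, dtype)
        print(f"{params}B @ {dtype}: ~{need:.0f} GB -> {gpus_needed(params, dtype=dtype)} GPU(s)")
```

Under these assumptions, a 7-billion-parameter model in fp16 uses roughly an eighth of a single 128 GB GPU, which is the gap between what researchers reserve and what they actually need.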

Overprovisioning is another form of waste, and enterprises are looking for more effective ways to checkpoint and hot-restart ML workloads outside the application layer.

For example, Researcher A’s job could run for 10 hours, then checkpoint and sleep while Researcher B runs a smaller 1-hour job to completion, after which Researcher A’s job is restored from its checkpoint.
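The scheduling arithmetic behind this example can be sketched in Python. This is a toy timeline model, not an implementation of transparent (application-external) checkpointing: it assumes a checkpoint is possible and only compares when each job finishes under three policies on a single GPU. The 10-hour and 1-hour figures come from the example above; Job A’s 48-hour total runtime, the 15-minute checkpoint and restore costs, and all function names are hypothetical.

```python
def checkpoint_restore_timeline(long_hours, preempt_at, short_hours,
                                ckpt_hours=0.0, restore_hours=0.0):
    """One GPU shared by a long job A and a short job B.

    A runs until `preempt_at`, is checkpointed, B runs to completion, then A
    is restored and resumes from its checkpoint rather than starting over.
    Returns (timeline, B finish time, A finish time), all in hours.
    """
    if not 0 < preempt_at < long_hours:
        raise ValueError("preempt_at must fall inside A's runtime")
    segments = [
        ("A runs", preempt_at),
        ("A checkpoints and sleeps", ckpt_hours),
        ("B runs to completion", short_hours),
        ("A restores from checkpoint", restore_hours),
        ("A resumes", long_hours - preempt_at),
    ]
    timeline, t = [], 0.0
    for label, duration in segments:
        timeline.append((t, t + duration, label))
        t += duration
    b_finish = timeline[2][1]  # end of B's segment
    return timeline, b_finish, t


def no_preemption(long_hours, short_hours):
    """B queues behind A: returns (B finish, A finish)."""
    return long_hours + short_hours, long_hours


def kill_and_restart(long_hours, preempt_at, short_hours):
    """Preempt without a checkpoint: A's first `preempt_at` hours are lost."""
    b_finish = preempt_at + short_hours
    return b_finish, b_finish + long_hours


if __name__ == "__main__":
    # Hypothetical: A needs 48 h in total, B needs 1 h, A is paused at hour 10,
    # and checkpointing and restoring each take 15 minutes.
    timeline, b_done, a_done = checkpoint_restore_timeline(48, 10, 1, 0.25, 0.25)
    for start, end, label in timeline:
        print(f"{start:6.2f}h -> {end:6.2f}h  {label}")
    print("checkpoint/restore (B, A):", b_done, a_done)                # 11.25 49.5
    print("no preemption      (B, A):", *no_preemption(48, 1))         # 49 48
    print("kill and restart   (B, A):", *kill_and_restart(48, 10, 1))  # 11 59
```

With these numbers, checkpointing lets B finish about 38 hours sooner than queuing behind A (hour 11.25 instead of 49) and delays A by only 1.5 hours. Preempting A without a checkpoint discards its first 10 hours of work, pushing A’s finish to hour 59.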

This kind of “sharing” would unlock organization-wide efficiency gains, which in turn would make a strong business case for investing in an AMD cluster.

AMD has an opportunity to unbundle Nvidia’s software pricing

The open source community has tried to address some of these limitations with projects like Kueue. And, of course, Nvidia presciently acquired RunAI to help its own customers manage their workloads more effectively.

But the RunAI acquisition creates yet another set of business problems: vendor lock-in and market-leader pricing.

One platform engineer shared with me: “They bought RunAI but only open-sourced the backend. The user interface and higher level abstractions are ultimately how you can use it, so it’s pay to play if you’re serious about orchestrating your workloads”.

Another research engineering leader told me that when they first started, the software to operate Nvidia GPUs cost a few dollars per card per year. Today, it’s bundled into a single SKU at $5,000 per package. This pricing strategy benefits Nvidia as the market leader, but it is pushing engineering leaders to look for alternatives.

Customers are open to alternatives

I spoke with a neocloud founder yesterday, who shared with me, “in the beginning, we thought we could price our GPUs at a premium, building on our brand and connections in the industry, but it turns out, customers just ultimately care about price and availability”.

With customers demanding better availability and pricing, AMD has a real opportunity to catch up to the market leader. Dylan Patel and others have suggested that AMD’s hardware could catch up to Nvidia’s as soon as 2026.

As the hardware reaches parity, I see an opportunity for AMD to build, or partner with ISVs on, solutions that enable scheduling, fractionalization, and preemption.

Most enterprises I speak with have a large Nvidia cluster and a smaller AMD test cluster, and they all struggle with the same things: job scheduling, overprovisioning, idle researchers, and waste from node and OOM failures. Even some of the most sophisticated F500 companies standing up MI300 clusters can’t support mixed workloads, and they would welcome an AMD-branded solution.

To paraphrase Mark Zuckerberg, “as soon as you start getting more intelligence in one part of the stack, you move on to the next, different set of bottlenecks. This is essentially how engineering works – once you solve one bottleneck, you get another bottleneck”.

AMD has shown a willingness to adapt, with strategic appointments of key leaders to executive GTM roles.

On the product side, AMD is hiring for software talent with strong ownership instincts, who will undoubtedly be tasked with building creative solutions up the stack.

It’s not the first time AMD has been the underdog. If it can build, buy, or partner its way to a software equivalent of NVAI, it will be a major contender in the GPU race.