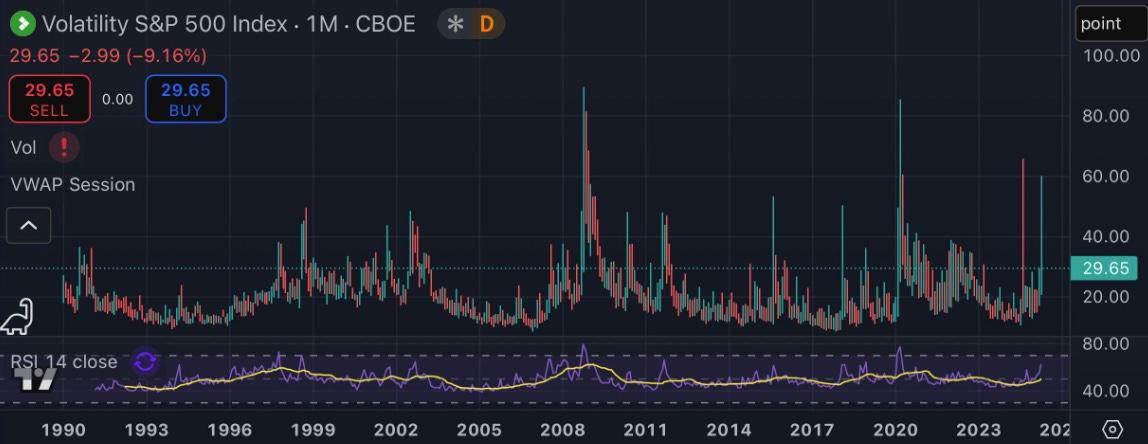

I spoke with the engineering leader at one of the largest trading platforms last week. One thought stuck with me: “computational usage tends to be higher during periods of market volatility — irrespective of whether traders we support are growing top line revenue”.

In some cases, quants might even make the wrong trades during volatile markets, causing the top line to shrink. Yet just to keep up with a systematic or quantitative strategy, we still quite literally need more computers.

Firms end up with higher OPEX and no guarantee of profitability, which puts pressure on HPC platform teams to maximize efficiency.

We don’t decide when markets get volatile, but platform teams are expected to do whatever they can to make sure that:

1) jobs complete on time, and

2) compute costs don’t blow up the PnL for the year.

In short, volatility comes with a price.

Market volatility rewards proactive platform engineering teams

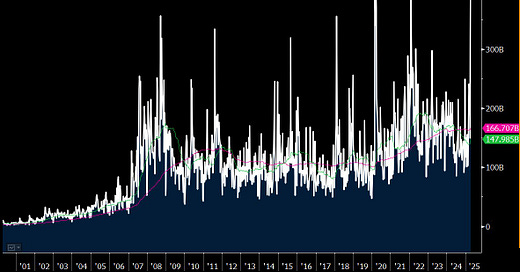

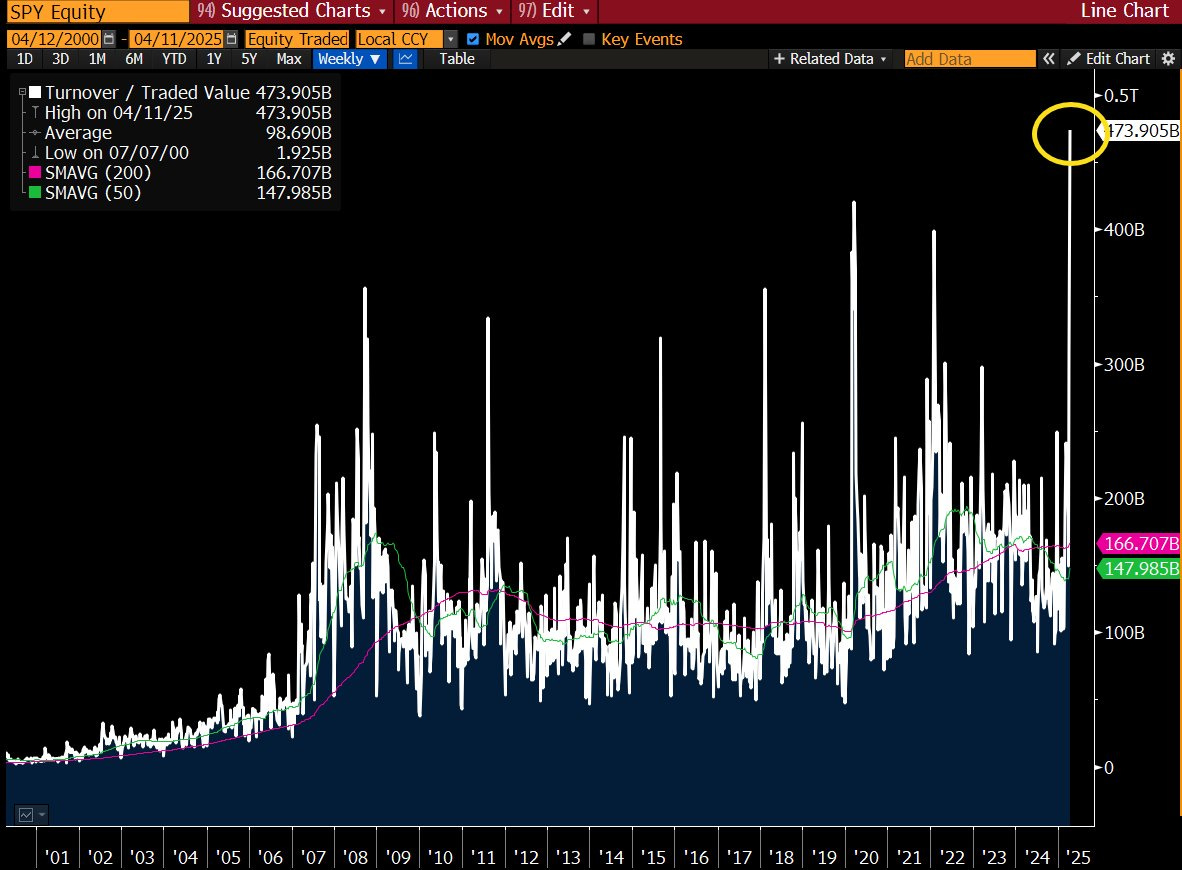

Last week, trading activity hit record highs across multiple asset classes, which means ML teams, quant teams, and every systematic shop had to backtest through massive volumes of structured and unstructured data.

The big moves affected multiple asset classes, not just equities.

Given these record moves in the market and the sheer amount of data to be analyzed, can your platform handle the increase in operational demand? Do you have enough compute at your fingertips? Can your scheduler successfully handle an increase in highly time-sensitive jobs? How does this affect your PnL?

All other things being equal, increased trading volumes elevate the role of the platform engineering team.

In a period of extended volatility, they're the team that can help make a difference.

The cloud can scale to meet trading demands

Firms with a cloud HPC environment have an advantage here, while firms operating solely on-prem, or lacking a mature, cost-efficient cloud architecture, are probably at an inherent disadvantage. I shared specifics on what I’ve learned trying to support enterprise HPC schedulers in a recent post.

Teams with an AWS or GCP contract are better positioned to absorb all of this extra volume, albeit at added cost, because they can draw on a combination of spot and on-demand instances. On-prem shops, and firms without cloud contracts, probably ran into resource constraints.
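To make the spot/on-demand trade-off concrete, here is a minimal Python sketch of a blended hourly rate for a burst fleet. The `InstanceMix` class, the $4.00 on-demand price, the 70% spot discount, and the 10,000 burst instance-hours are all hypothetical placeholders, not AWS or GCP list prices, and the model ignores spot interruptions and any work they destroy.

```python
from dataclasses import dataclass


@dataclass
class InstanceMix:
    """Hypothetical burst fleet split between on-demand and spot capacity."""
    on_demand_hourly: float  # $/instance-hour (placeholder, not a real list price)
    spot_discount: float     # fraction off on-demand, e.g. 0.70 = 70% cheaper (assumed)
    spot_fraction: float     # share of instance-hours run on spot, 0..1

    def __post_init__(self) -> None:
        if not 0.0 <= self.spot_discount <= 1.0:
            raise ValueError("spot_discount must be in [0, 1]")
        if not 0.0 <= self.spot_fraction <= 1.0:
            raise ValueError("spot_fraction must be in [0, 1]")

    def blended_hourly(self) -> float:
        # Weighted average of the spot rate and the on-demand rate.
        spot_hourly = self.on_demand_hourly * (1.0 - self.spot_discount)
        return (self.spot_fraction * spot_hourly
                + (1.0 - self.spot_fraction) * self.on_demand_hourly)

    def burst_cost(self, instance_hours: float) -> float:
        # Total cost of the extra capacity at the blended rate.
        return self.blended_hourly() * instance_hours


if __name__ == "__main__":
    hours = 10_000  # hypothetical extra instance-hours during a volatility spike
    all_on_demand = InstanceMix(on_demand_hourly=4.00, spot_discount=0.70, spot_fraction=0.0)
    mostly_spot = InstanceMix(on_demand_hourly=4.00, spot_discount=0.70, spot_fraction=0.8)
    print(f"all on-demand: ${all_on_demand.burst_cost(hours):,.0f}")
    print(f"80% spot:      ${mostly_spot.burst_cost(hours):,.0f}")
```

With these placeholder inputs, running 80% of the burst on spot comes to $17,600 versus $40,000 all on-demand. Real savings depend on actual discounts and on how much work is lost when spot capacity is reclaimed.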

For funds operating exclusively on-prem, record trading volumes likely meant very long job queues in SLURM, HTCondor, LSF, and Symphony, along with impatient, unhappy traders. We developed a solution that lets these funds run their traditional schedulers on AWS and GCP when necessary, and do so cost-efficiently on spot instances by leveraging MemVerge transparent checkpointing.
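Why does checkpointing make spot viable for long-running jobs? The minimal Python sketch below counts the instance-hours burned when a job is preempted and either restarts from scratch or resumes from its last checkpoint. It is a toy model, not MemVerge’s implementation: `compute_hours_consumed`, the preemption schedule, and the checkpoint interval are hypothetical, and checkpoint/restore overhead is ignored.

```python
from typing import Optional, Sequence


def compute_hours_consumed(job_hours: float,
                           preempt_after: Sequence[float],
                           checkpoint_interval: Optional[float]) -> float:
    """Instance-hours paid for to finish a job needing `job_hours` of work.

    preempt_after[i] is how long attempt i runs before its spot instance is
    reclaimed. checkpoint_interval=None means every preemption restarts the
    job from zero; otherwise progress is saved every `checkpoint_interval`
    hours of runtime and a restart resumes from the last saved checkpoint.
    Checkpoint and restore overhead is ignored in this toy model.
    """
    if checkpoint_interval is not None and checkpoint_interval <= 0:
        raise ValueError("checkpoint_interval must be positive")
    saved = 0.0     # work preserved by checkpoints, in hours
    consumed = 0.0  # instance-hours billed so far
    for run in preempt_after:
        remaining = job_hours - saved
        if run >= remaining:
            break  # this attempt finishes before the preemption would hit
        consumed += run
        if checkpoint_interval is not None:
            # Only work up to the last completed checkpoint survives.
            saved += (run // checkpoint_interval) * checkpoint_interval
    # The final, uninterrupted attempt runs whatever work is left.
    consumed += job_hours - saved
    return consumed


if __name__ == "__main__":
    preemptions = [6.0, 5.0]  # hypothetical: reclaimed after 6h, then after 5h
    print(compute_hours_consumed(10.0, preemptions, None))  # restart from scratch
    print(compute_hours_consumed(10.0, preemptions, 1.0))   # hourly checkpoints
```

Under this hypothetical schedule, a 10-hour job burns 21 instance-hours when every preemption restarts it, versus 10 with hourly checkpoints, which is the difference between spot being a bargain and spot being a trap.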

We’re just in the first quarter

If Trump’s first presidency is an accurate baseline, the remaining three and a half years of his term will be more volatile than the Biden years.

And irrespective of politics, the structural cost of compute platforms is going to be higher for the foreseeable future.

My recommendations for platform leaders, then, are to:

Embrace the cloud, including a strategy for using spot VMs for both CPU and GPU workloads. Hyperscalers are the most cost-effective way to “burst” and extend the capacity of your on-prem HPC cluster.

Implement transparent checkpointing software to sleep, restart, and reprioritize jobs. Did you know it is even possible to move jobs back and forth between cloud and on-prem based on cluster utilization?

Work with finance and planning teams to model what a more volatile next three years will really cost the firm. Proactively update your operating budgets so that there are no surprises and you can request the resources needed to enhance your HPC platforms ahead of the next vol spike.

Portfolio managers with active and discretionary strategies will need to stay current on the latest market-moving news without sleep-depriving their entire analyst team. Just look at the April 7th tariff-pause gaffe from a Trump staffer and the market’s reaction. Hint: Up your AI agent capabilities firm-wide.
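The budgeting recommendation above, modeling what a more volatile three years could cost, can start as a back-of-the-envelope calculation that scales a baseline daily compute bill on high-volatility days. The Python sketch below is only that: the function names, the $50,000 baseline daily cost, 252 trading days, demand doubling on high-vol days, and 20, 40, and 60 such days per year are all hypothetical assumptions, and a real model would use your own billing and scheduler data.

```python
from typing import List, Sequence


def annual_compute_cost(baseline_daily_cost: float,
                        high_vol_days: int,
                        vol_demand_multiplier: float,
                        trading_days: int = 252) -> float:
    """Annual compute spend when demand scales by `vol_demand_multiplier`
    on `high_vol_days` of the year and stays at baseline otherwise."""
    if not 0 <= high_vol_days <= trading_days:
        raise ValueError("high_vol_days must be between 0 and trading_days")
    normal_days = trading_days - high_vol_days
    return baseline_daily_cost * (normal_days + high_vol_days * vol_demand_multiplier)


def multi_year_projection(baseline_daily_cost: float,
                          high_vol_days_by_year: Sequence[int],
                          vol_demand_multiplier: float) -> List[float]:
    """One annual cost per entry in `high_vol_days_by_year`."""
    return [annual_compute_cost(baseline_daily_cost, days, vol_demand_multiplier)
            for days in high_vol_days_by_year]


if __name__ == "__main__":
    # All inputs are hypothetical placeholders.
    calm = multi_year_projection(50_000, [0, 0, 0], 2.0)
    volatile = multi_year_projection(50_000, [20, 40, 60], 2.0)
    print(f"calm:       ${sum(calm):,.0f}")
    print(f"volatile:   ${sum(volatile):,.0f}")
    print(f"budget gap: ${sum(volatile) - sum(calm):,.0f}")
```

With these placeholder inputs, the volatile scenario totals $43.8M over three years versus $37.8M in a calm one, a $6.0M gap worth raising with finance before the next vol spike rather than after it.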

As always, I welcome any commentary, either in the comments or by email at jing.xie@memverge.com.

If this post was helpful or interesting to you, please help me grow this Substack by sharing it with others.

Good luck out there.